As artificial intelligence continues to advance rapidly, one area generating significant interest and debate is AI-generated content. Whether it’s essays, articles, or even poetry, sophisticated language models like ChatGPT can create prose so fluent that it’s difficult to differentiate from human writing. This brings us to the need for AI content detectors—tools designed to assess whether a piece of text was written by a human or an AI model.

So, how do these detectors work? As a data scientist working with natural language processing, I’ll guide you through the technical foundation that makes these detection systems function and explain their limitations, strengths, and ongoing evolution.

The Core Principle: Statistical Analysis of Text Patterns

AI content detectors primarily analyze the statistical patterns and linguistic signatures embedded in a piece of writing. These tools don’t “understand” content in the way that humans do—they rely on numbers, probabilities, and patterns.

Key areas content detectors evaluate include:

- Token distribution: AI-generated text tends to use a more uniform vocabulary and sentence structure. Detectors measure how diverse the vocabulary is (for example, the ratio of distinct words to total words) and compare it to typical human writing patterns.

- Perplexity: This is a measure of how predictable a piece of text is to a language model; the lower the perplexity, the less the text "surprises" the model. AI-generated text often has lower perplexity because it is produced by a statistical model that favors high-probability word choices, making it more predictable than human-written content.

- Burstiness: Human writing typically has bursts of complexity and variation. In contrast, AI writing may lack this kind of variability. Detectors scan for such natural inconsistencies.
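To make the three signals above concrete, here is a minimal sketch in plain Python. It is an illustration, not how any particular detector is implemented: the function names are hypothetical, the tokenizer is a simple regular expression, and perplexity is computed under a smoothed unigram model built from a reference text, whereas production tools typically score tokens with a neural language model.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def tokenize(text):
    """Lowercase word tokens; a deliberately simple stand-in for a real tokenizer."""
    return re.findall(r"[a-z']+", text.lower())


def type_token_ratio(text):
    """Vocabulary diversity: distinct words divided by total words."""
    tokens = tokenize(text)
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def unigram_perplexity(text, reference_counts):
    """Perplexity of `text` under a unigram model with add-one smoothing.

    Assumption: a unigram model stands in for a real language model. The
    formula is the usual one: exp of the negative mean log-probability.
    """
    tokens = tokenize(text)
    if not tokens:
        return float("inf")
    total = sum(reference_counts.values())
    vocab_size = len(reference_counts) + 1  # +1 bucket for unseen words
    log_prob = 0.0
    for tok in tokens:
        p = (reference_counts.get(tok, 0) + 1) / (total + vocab_size)
        log_prob += math.log(p)
    return math.exp(-log_prob / len(tokens))


def burstiness(text):
    """Coefficient of variation of sentence lengths (std / mean)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(tokenize(s)) for s in sentences]
    if len(lengths) < 2 or mean(lengths) == 0:
        return 0.0
    return pstdev(lengths) / mean(lengths)


reference = Counter(tokenize("the cat sat on the mat"))
familiar = unigram_perplexity("the cat", reference)
unfamiliar = unigram_perplexity("quantum zebra", reference)
# Words the model has seen often are less "surprising", so familiar < unfamiliar.
```

A detector would compute signals like these for a submitted text and compare them against values typical of human writing; none of them is conclusive on its own.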

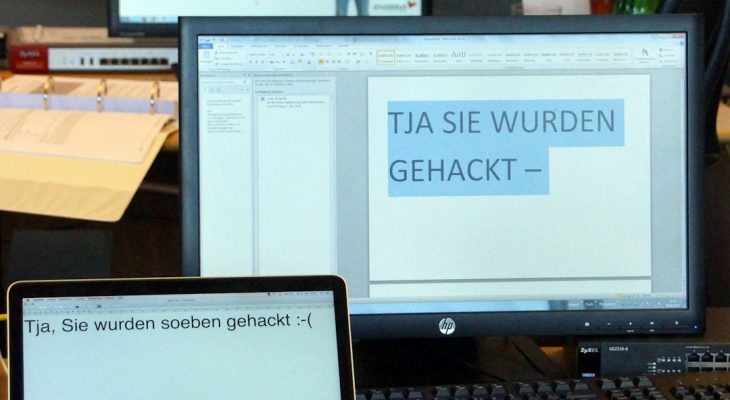

Machine Learning Classification Models

Many AI content detectors are built upon machine learning classifiers. These models are trained on a large corpus of both human-written and AI-generated content. Labeled examples are fed into algorithms such as logistic regression, decision trees, or neural networks, which learn the subtle differences between the two classes.
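As a rough illustration of the training step, the sketch below fits a logistic regression classifier with plain batch gradient descent, using only the standard library. The feature values are made-up toy numbers (a scaled perplexity and a burstiness score per document), and the function names, learning rate, and epoch count are assumptions chosen for the example; a real detector would train on thousands of labeled documents, usually with an established ML library.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(weights, bias, features):
    """Probability that a feature vector belongs to the AI-generated class."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, features)))


def train(samples, labels, lr=0.5, epochs=2000):
    """Fit logistic regression with batch gradient descent on the log loss."""
    n_features = len(samples[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(samples, labels):
            error = predict(weights, bias, x) - y
            grad_b += error
            for j in range(n_features):
                grad_w[j] += error * x[j]
        bias -= lr * grad_b / len(samples)
        weights = [w - lr * g / len(samples) for w, g in zip(weights, grad_w)]
    return weights, bias


# Made-up toy features: (scaled perplexity, burstiness). Label 1 = AI-generated.
SAMPLES = [(0.20, 0.10), (0.30, 0.20), (0.25, 0.15),
           (0.80, 0.70), (0.90, 0.60), (0.70, 0.80)]
LABELS = [1, 1, 1, 0, 0, 0]

weights, bias = train(SAMPLES, LABELS)
```

After training, `predict(weights, bias, features)` returns a value between 0 and 1 for any new feature vector. The toy data is cleanly separable, which real data rarely is.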

Commonly used models and architectures:

- Transformer-based classifiers: These include lighter versions of models like BERT or RoBERTa fine-tuned specifically for detection tasks.

- N-gram analysis: Some simpler detection tools rely on analyzing the frequency of consecutive word pairs or triplets, which often differ between human and AI-generated text.

- Custom heuristics: Rule-based systems still exist, especially in conjunction with statistical models, to flag content based on specific syntactical clues or semantic repetition.
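The n-gram idea from the list above can be sketched in a few lines. This is a simplified example, assuming whitespace tokenization and a hypothetical `repeated_ngram_rate` feature; real tools would typically compare n-gram frequencies against reference corpora rather than look at a single text in isolation.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return the list of consecutive n-token tuples in `tokens`."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def ngram_counts(text, n=2):
    """Frequency of each n-gram in `text` (whitespace tokenization)."""
    return Counter(ngrams(text.lower().split(), n))


def repeated_ngram_rate(text, n=2):
    """Share of n-gram occurrences that belong to an n-gram seen more than once."""
    counts = ngram_counts(text, n)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / total


sample = "it is important to note that it is important to be clear"
top_bigrams = ngram_counts(sample).most_common(3)
rate = repeated_ngram_rate(sample)
```

A high repetition rate is only a weak hint, since formulaic human writing repeats phrases too; it is the kind of feature that would be fed into a classifier alongside others.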

The output is usually a score or probability indicating how likely it is that the content was AI-generated. These scores are not binary truths; they are probability estimates based on pattern recognition, and they should be read as such.
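One way to respect that uncertainty in code is to map the probability to a three-way verdict instead of a yes/no answer. The sketch below assumes a classifier that emits a raw score (a logit) and uses hypothetical cut-offs of 0.3 and 0.7; suitable thresholds depend on the tool and on how costly a false accusation is.

```python
import math


def sigmoid(z):
    """Convert a raw classifier score (logit) into a probability in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def verdict(probability, low=0.3, high=0.7):
    """Map a probability to a cautious label.

    `low` and `high` are illustrative thresholds, not values taken from
    any real detector. Anything in between is reported as inconclusive.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability >= high:
        return "likely AI-generated"
    if probability <= low:
        return "likely human-written"
    return "inconclusive"


label = verdict(sigmoid(0.0))  # a raw score of 0 means the model is undecided
```

Keeping an explicit "inconclusive" band makes it harder to treat a borderline score as proof.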

Strengths and Limitations of Detection Tools

Despite their impressive capabilities, AI content detectors are not foolproof. They work well in many cases but struggle in certain scenarios.

Strengths:

- High detection accuracy for longer texts or content generated using older AI models.

- Can support academic and professional integrity efforts by flagging suspicious text.

Limitations:

- Short texts are harder to assess because they contain too few words for the statistical signals to be reliable.

- Some AI models can be fine-tuned or prompted to mimic human writing styles effectively, lowering detector accuracy.

- False positives are possible—creative or technical human writing could be mistakenly flagged as AI-generated.

Improving Accuracy: Hybrid Approaches

To improve reliability, modern detection systems are starting to incorporate hybrid approaches. These combine multiple techniques, from machine learning and statistical analysis to contextual signals drawn from metadata.

Examples of hybrid enhancements include:

- Timestamp and behavioral analysis—looking at how long it took someone to write the text.

- Analyzing user writing history—comparing new content to previously written material by the same author.

- Cross-verification with known AI-generated output libraries for similarity checks.
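The similarity check in the last item can be approximated with word shingles and Jaccard similarity. This is a sketch under stated assumptions: the function names are hypothetical, the "library" is just a list of strings, and a production system would more likely use hashing or embeddings to search a large collection efficiently. The same comparison could be applied to an author's earlier writing.

```python
def shingles(text, k=3):
    """Set of overlapping k-word sequences in `text`."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Size of the intersection divided by size of the union of two sets."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def max_similarity(candidate, known_outputs, k=3):
    """Highest Jaccard similarity between `candidate` and any known output."""
    cand = shingles(candidate, k)
    return max(
        (jaccard(cand, shingles(doc, k)) for doc in known_outputs),
        default=0.0,
    )


# Placeholder strings standing in for a library of known AI-generated text.
library = [
    "in conclusion it is important to consider both sides",
    "the quick brown fox jumps over the lazy dog",
]
score = max_similarity("the quick brown fox jumps over the fence", library)
```

A score near 1.0 indicates near-verbatim overlap with a known output, while a low score says little either way, since a model rarely produces the same text twice.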

Conclusion: Proceed With Caution

AI content detectors are a powerful yet imperfect solution to an emerging problem. While they provide valuable insights, they should not be taken as final arbiters of truth. In a world increasingly shaped by AI, understanding how these detectors work allows us to use them with greater discernment.

As data scientists, educators, and content creators, we must weigh both the promise and the perils of this technology. Used responsibly, AI content detectors supplement human judgment rather than replace it.